RESEARCH

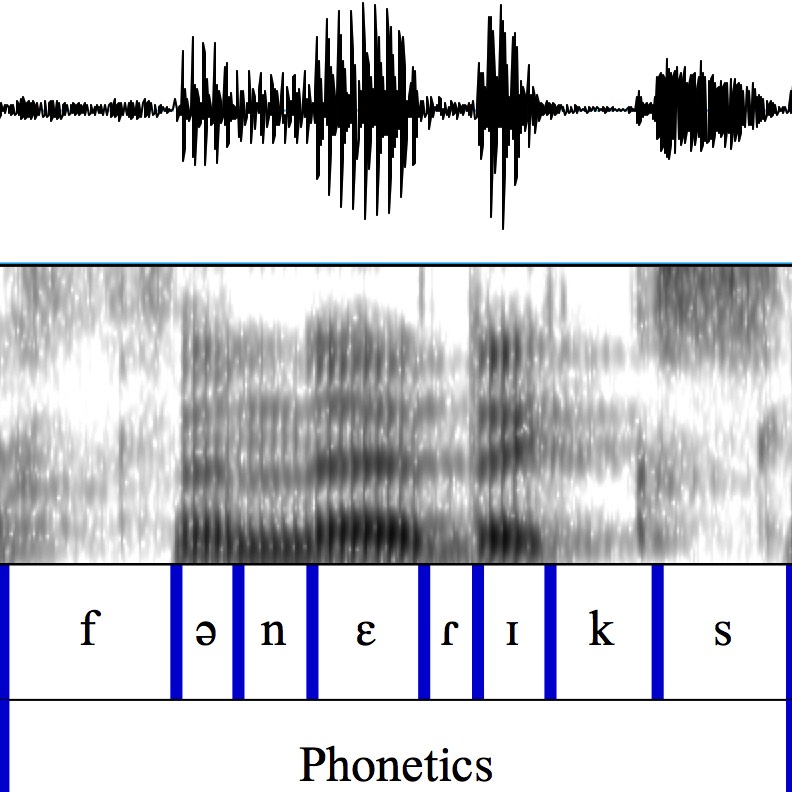

In the CLR Phonetics Lab, our research focuses mainly on speech production and pronunciation, and sometimes on speech perception. Within speech production, some of our projects focus on articulatory phonetics and others on acoustic phonetics. For acoustic phonetics, we mainly use open-source acoustic analysis software such as Praat. When quiet recording conditions are needed, we have access to an anechoic chamber and a soundproof studio. For articulatory phonetics, we have an ultrasound machine that displays real-time images of the tongue moving during speech. We also have video recording equipment and access to a Vicon motion capture system for tracking the movements of the lips, jaw, eyebrows, and other facial features during speech. For deep learning, we have a dedicated high-performance computer (the Deep Learning Box). For reaction time (RT) research, we use E-Prime software with Chronos hardware. Finally, we have a long-standing interest in studying the Aizu dialect of Japanese and in helping to preserve it for future generations.